Project summary and conclusion

I’m really proud that I got as far as I did this summer. I learned a lot, and even though the pupil detection algorithm is not particularly advanced, I implemented it myself from a paper. Also, the app actually works!

The ingredients are:

- Apple iPad 10.5” (2017) for running the iOS app and providing high-resolution camera input at 30 fps,

- Swift, Objective-C and C++ (and Python for experiments in OpenCV outside the app),

- Apple’s SpriteKit for the UI,

- Apple’s hardware-based AVCapture for face detection,

- Dlib for five-point face landmark detection (eye corners) and face alignment normalization,

- Pupil detection algorithm implemented with OpenCV from the paper “Automatic Adaptive Center of Pupil Detection Using Face Detection and CDF Analysis” (Asadifard and Shanbezadeh, 2010),

- Pearson’s Product Moment Correlation Coefficient for calculating the correlation between pupil and sprite movements.
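
As a minimal sketch of the correlation step (not the app’s actual code, which is written in Swift, Objective-C and C++), Pearson’s coefficient between a series of pupil positions and a series of sprite positions could be computed like this. The function name `pearson_r` and the choice to return 0.0 for a motionless signal are assumptions made for illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Deviations from the mean for each sample.
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    covariance = sum(a * b for a, b in zip(dx, dy))
    var_x = sum(a * a for a in dx)
    var_y = sum(b * b for b in dy)
    if var_x == 0 or var_y == 0:
        # Assumption: a signal that does not move at all counts as uncorrelated.
        return 0.0
    return covariance / math.sqrt(var_x * var_y)


# Hypothetical horizontal positions over four frames.
sprite_x = [1.0, 2.0, 3.0, 4.0]
pupil_x = [2.0, 4.0, 6.0, 8.0]
r = pearson_r(pupil_x, sprite_x)
```

A value of `r` close to 1 means the pupil moved in step with the sprite, a value close to -1 means it moved in the opposite direction, and a value near 0 means no linear relationship.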

I would really have liked to do some testing with infants this summer, but the app was far from ready when people with infants were visiting us. I hope that I can make it happen during the autumn.

Here’s the project report.

Future enhancements

There are a lot of things that I would like to do going forward. A few of them are:

- Release it on the iOS App Store,

- Enable parents to use their own photos as moving objects for investigating what images/things/people their child is drawn to,

- Add small animations to the moving images, perhaps by slowly toggling between two or three photos.

- Pair objects at random and keep a long-term scoreboard. In our case, that would finally settle what we’ve always expected: our cats would win over us, the parents.

- Adjust for the head tilt angle by combining the corresponding proportions of horizontal and vertical pupil movement. That way, detection should be rotation invariant (although vertical smooth pursuit takes longer to develop in infants than horizontal pursuit).

- Make the hearts move faster with more correlation. As it is now, the hearts only move when the average correlation over the last 30 frames is at least 0.7. I also use the correlation to control the opacity of the hearts, which indicates that the user is following the moving objects, so it is confusing when the hearts are visible but not moving.

- Investigate how the pupil detection works with make-up, different skin tones, eye configurations, etc., starting by verifying that I get roughly the same results as the original paper got when running the algorithm on the BioID dataset.
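
Two of the ideas above, tilt compensation and correlation-driven heart speed, can be sketched roughly as follows. This is only an illustration under stated assumptions: the names `movement_along_eye_axis` and `HeartSpeed`, the sign convention for the roll angle, and the linear mapping from correlation to speed are all hypothetical. Only the 30-frame window comes from the current app.

```python
import math
from collections import deque


def movement_along_eye_axis(dx, dy, roll_radians):
    """Project a pupil displacement (dx, dy) in image coordinates onto
    the head's own horizontal axis, given the head roll angle.

    Assumption: roll_radians is the angle of the line between the eye
    corners relative to the image x-axis, in the image's coordinate system.
    """
    return dx * math.cos(roll_radians) + dy * math.sin(roll_radians)


class HeartSpeed:
    """Maps a rolling mean of correlation values to a heart speed."""

    def __init__(self, window=30, max_speed=1.0):
        # Only the most recent `window` correlation values are kept.
        self.values = deque(maxlen=window)
        self.max_speed = max_speed

    def update(self, correlation):
        """Add one frame's correlation and return the new speed."""
        self.values.append(correlation)
        mean = sum(self.values) / len(self.values)
        # Assumption: speed grows linearly with the mean correlation and
        # negative correlation never moves the hearts.
        clamped = min(1.0, max(0.0, mean))
        return self.max_speed * clamped
```

With a mapping like this, the hearts would move whenever their opacity is above zero, only more slowly at low correlation, instead of waiting for the 0.7 threshold.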

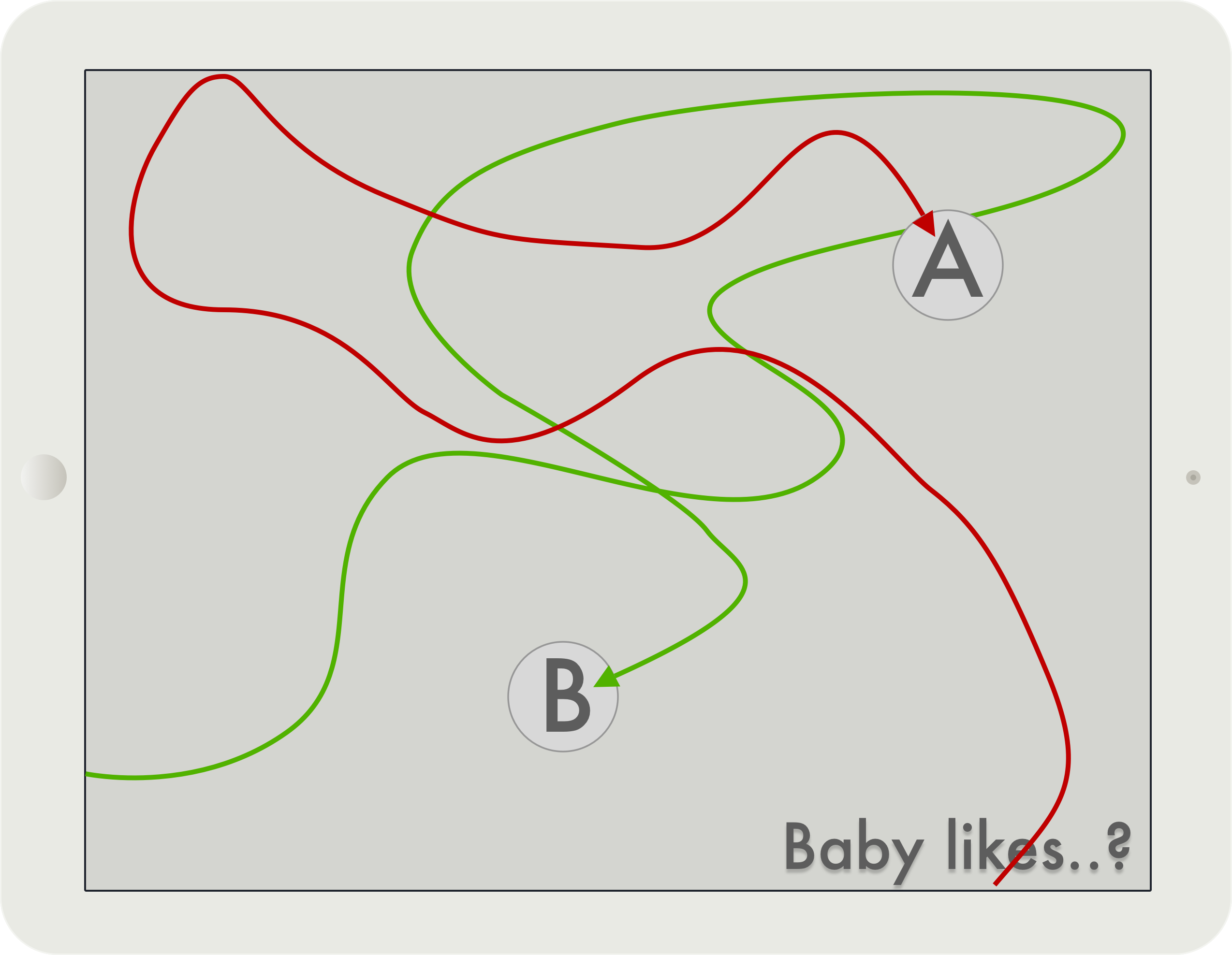

Also, since I can produce correlations in the vertical axis as well, it would be really fun to pursue the other sketch in my project specification:

A game based on that could be really fun for toddlers.